In terms of credit risk strategy, the lending markets in America and Britain undoubtedly lead the way, while several other markets around the world are applying many of the same principles accurately and with good results. However, for a number of reasons and in a number of ways, many more lending markets are much less sophisticated. In this article I will focus on these developing markets, discussing how credit risk strategies can be applied in them and how doing so will add value to a lender.

The fundamentals that underpin credit risk strategies are constant, but as lenders grow in sophistication the way in which these fundamentals are applied may vary. At the very earliest stages of development the focus will be on automating the decisioning processes. Once this has been done, the focus should shift to the implementation of basic scorecards and segmented strategies which will, in time, evolve from focusing on risk mitigation to profit maximisation.

Automating the Decisioning Process

The most under-developed markets tend to grant loans using a branch-based decisioning model as a legacy of the days of fully manual lending. As such, it is an aspect more typical of the older and larger banks in developing regions and one that is allowing newer and smaller competitors to enter the market and be more agile.

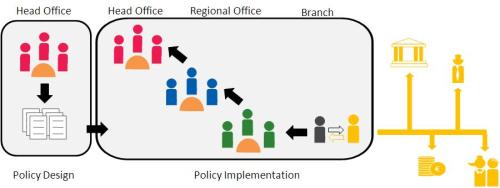

A typical branch-lending model looks something like the diagram below:

In a model like this, the credit policy is usually designed and signed off by a committee of very senior managers working in the head office. This policy is then handed over to the branches for implementation, usually by delivering training and documentation to each of the bank’s branch managers. This immediately presents an opportunity for misinterpretation to arise as branch managers try to internalise the intentions of the policy-makers.

Once the policy has been handed over, it becomes the branch manager’s responsibility to ensure that it is implemented as consistently as possible. However, since each branch manager is different, as is each member of branch staff, this is seldom possible and so policy implementation tends to vary to a greater or lesser extent across the branch network.

Even when the policy is well implemented, though, the nature of a single written policy is such that it can identify the applicants that are considered too risky to qualify for a loan but it cannot go beyond that to segment accepted customers into risk groups. This means that the only way that senior management can ensure the policy is being implemented correctly in the highest risk situations is by using the size of the loan as an indication of risk. To do this, a series of triggers is set to escalate loan applications to management committees.

In this model, which is not atypical, there are three committees. The first sits within the branch itself, where senior branch staff review the loan officer’s work on small-value loan applications. If the loan size exceeds the branch committee’s mandate, the application must be escalated to a regional committee or, if sufficiently large, all the way to a head-office committee.

Although it is easy to see how such a series of committees came into being, their on-going existence adds significant costs and delays to the application process.

In developing markets where skills are short, a significant premium must usually be paid for high-quality management staff. So, to use the time of these managers to essentially remake the same decision over and over (having already decided on the policy, they now need to repeatedly decide whether an application meets the agreed-upon criteria) is an inefficient way to invest a valuable resource. More important, though, are the delays that must necessarily accompany such a series of committees. As an application is passed from one team, and more importantly from one location, to another, a delay is incurred. Added to this is the fact that committees need to convene before they can make a decision and usually do so on fixed dates, meaning that a loan application may have to wait a number of days until the relevant committee next meets.

But the costs and delays of such a model are not incurred by the lender alone; the borrower too is burdened with a number of indirect costs. In order to qualify for a loan in a market where impartial third-party credit data is not widely available (i.e. where there are no strong and accurate credit bureaus), an applicant typically needs to ‘over prove’ their risk worthiness. Where address and identification data is equally unreliable, this requirement is even more burdensome. In a typical example an applicant might need to show an established relationship with the bank (6 months of salary payments, for example); provide a written undertaking from their employer to notify the bank of any change in employment status; supply the address of a reference who can be contacted when the original borrower cannot; and often put up some degree of security, even for small-value loans.

These added costs serve to discourage lending and add to what is usually the biggest problem faced by banks with a branch-based lending model: an inability to grow quickly and profitably.

Many people might think that the biggest issue faced by lenders in developing markets is the risk of bad debt, but this is seldom the case. Lenders know that they don’t have access to all the information they need when they need it, and so they have put in place the processes I’ve just discussed to mitigate the risk of losses. However, as I pointed out, those processes are ungainly and expensive. Too ungainly and too expensive, as it turns out, to facilitate growth, and this is what most lenders want to change as they see more agile competitors starting to enter their markets.

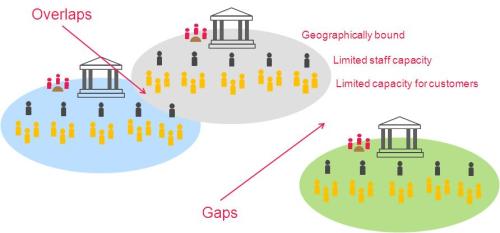

A fundamental problem with growing under a branch-based lending model is that the costs of growing the system rise in line with the increase in capacity. So, to serve twice as many customers will cost almost twice as much. This is the case for a few reasons. Firstly, each branch serves only a given geographical catchment area and so to serve customers in a new region, a new branch is likely to be needed. Unfortunately, it is almost impossible to add branches perfectly and each new branch is likely to lead to either an inefficient overlapping of catchment areas or ineffective gaps. Secondly, within the branch itself there is a fixed capacity both in terms of the number of staff it can accommodate and in terms of the number of customers each member of staff can serve. Both of these can be adjusted, but only slightly.

Added to this, such a model does not easily accommodate new lending channels. If, for example, the bank wished to use the internet as a channel it would need to replicate much of the infrastructure from the physical branches in the virtual branch because, although no physical buildings would be required and the coverage would be universal, the decisioning process would still require multiple loan officers and all the standard committees.

To overcome this many lenders have turned to agency agreements, most typically with large private and government employers. These employers will usually handle the administration of loan applications and loan payments for their staff and in return will either expect that their staff are offered loans at a discounted rate or that they themselves are compensated with a commission.

By simply taking the current policy rules from the branch-based process and converting them into a series of automated rules in a centralised system, many of these basic problems can be overcome, even before improving those rules with advanced statistical scorecards. Firstly, the gap between policy design and policy implementation is removed, removing any risk of misinterpretation. Secondly, the need for committees to ensure proper policy implementation is greatly reduced, greatly reducing the associated costs and delays. Thirdly, the risk of inconsistent application is removed as every application, regardless of the branch originating it or the staff member capturing the data, is treated in the same way. Finally, since the decisioning is automated, there is almost no cost to add a new channel onto the existing infrastructure, meaning that new technologies like internet and mobile banking can be leveraged as profitable channels for growth.
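As a rough illustration of what converting a written policy into centralised, automated rules might look like, the Python sketch below encodes three decline rules in a single function. The thresholds, field names and the `decide` function are all hypothetical and chosen only for illustration; a real policy would contain many more rules.

```python
from dataclasses import dataclass


@dataclass
class Application:
    age: int
    annual_income: float        # in euros
    years_with_employer: float


# Hypothetical policy thresholds (assumptions, not a real lender's policy).
MIN_AGE = 21
MIN_ANNUAL_INCOME = 10_000
MIN_YEARS_WITH_EMPLOYER = 1


def decide(app: Application):
    """Apply every policy rule and return (decision, decline_reasons)."""
    reasons = []
    if app.age < MIN_AGE:
        reasons.append("age")
    if app.annual_income < MIN_ANNUAL_INCOME:
        reasons.append("income")
    if app.years_with_employer < MIN_YEARS_WITH_EMPLOYER:
        reasons.append("tenure")
    decision = "decline" if reasons else "accept"
    return decision, reasons


# The same function is called whatever the branch or channel,
# so every application is treated identically.
print(decide(Application(age=30, annual_income=12_000, years_with_employer=2)))
print(decide(Application(age=20, annual_income=12_000, years_with_employer=0.5)))
```

Because the rules live in one place, a new channel only needs to capture the application data and call the same function.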

The Introduction of Scoring

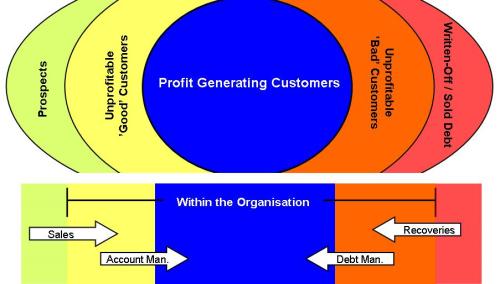

With the basic infrastructure in place it is time to start leveraging it to its full advantage by introducing scorecards and segmented strategies. One of the more subtle weaknesses of a manual decision is that it is very hard to use a policy to do anything other than decline an account. As soon as you try to make a more nuanced decision and categorise accepted accounts into risk groups the number of variables increases too fast to deal with comfortably.

It is easy enough to say that an application can be accepted only if the applicant is over 21 years of age, earns more than €10 000 a year and has been working for their current employer for at least a year but how do you segment all the qualifying applications into low, medium and high risk groups? A low risk customer might be one that is over 35 years old, earns more than €15 000 and has been working at their current employer for at least a year; or one that is over 21 years old but who earns more than €25 000 and has been working at their current employer for at least two years; or one that is over 40 years old, earns more than €15 000 and has been working at their current employer for at least a year, etc.

It is too difficult to manage such a policy using anything other than an automated system that uses a scorecard to identify and segment risk across all accounts. Being able to do this allows a bank to begin customising its strategies and its products to each customer segment or niche. Low risk customers can be attracted with lower prices or larger limits, high spending customers can be offered a premium card with more features but also with higher fees, etc.
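To make the idea concrete, here is a minimal sketch of a points-based scorecard that replaces the tangle of "or" conditions with a single score and a pair of cut-offs. The points, the cut-offs and the inclusive lower bounds are invented for illustration; a real scorecard would derive them statistically from performance data.

```python
# Hypothetical points table: for each attribute, a list of
# (inclusive lower bound, points) pairs ordered from highest bound down.
SCORECARD = {
    "age": [(40, 30), (35, 25), (21, 10)],
    "annual_income": [(25_000, 40), (15_000, 25), (10_000, 10)],
    "years_with_employer": [(2, 30), (1, 15)],
}

# Hypothetical score cut-offs for the risk bands.
LOW_RISK_CUTOFF = 65
MEDIUM_RISK_CUTOFF = 45


def points_for(attribute, value):
    """Return the points of the first band whose lower bound the value meets."""
    for lower_bound, points in SCORECARD[attribute]:
        if value >= lower_bound:
            return points
    return 0


def score(applicant):
    """Sum the points across all scorecard attributes."""
    return sum(points_for(name, applicant[name]) for name in SCORECARD)


def risk_band(total):
    if total >= LOW_RISK_CUTOFF:
        return "low"
    if total >= MEDIUM_RISK_CUTOFF:
        return "medium"
    return "high"


applicant = {"age": 36, "annual_income": 16_000, "years_with_employer": 1}
total = score(applicant)
print(total, risk_band(total))
```

An older applicant on a moderate income and a younger applicant on a high income can both reach the low risk band without a separate rule having to be written for each combination.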

The first step in the process would be to implement a generic scorecard; that is, a scorecard built using pooled third-party data that relates to a portfolio similar to the one in which it is to be implemented. These scorecards are cheap and quick to implement and, when used to inform only simple strategies, offer almost as much value as a fully bespoke scorecard would. Over time the data needed to build a more specific scorecard can be captured so that the generic scorecard can be replaced after eighteen to twenty-four months.

But the making of a decision is not the end goal; all decisions must be monitored on an on-going basis so that strategy changes can be implemented as soon as circumstances dictate. Again this is not something that is possible to do using a manual system where each review of an account’s current performance tends to involve as much work as the original decision to lend to that customer did. Fully fledged behavioural scorecards can be complex to build for developing banks but at this stage of the credit risk evolution a series of simple triggers can be sufficient. Reviewing an account in an automated environment is virtually instantaneous and free and so strategy changes can be implemented as soon as they are needed: limits can be increased monthly to all low risk accounts that pass a certain utilisation trigger, top-up loans can be offered to all low and medium risk customers as soon as their current balances fall below a certain percentage of the original balance, etc.
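The two triggers mentioned above could be sketched as follows. The trigger levels, the `Account` fields and the action names are assumptions made for the example, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class Account:
    risk_band: str                  # "low", "medium" or "high"
    product: str                    # "card" or "loan"
    balance: float
    limit: float = 0.0              # used for cards
    original_balance: float = 0.0   # used for loans


# Hypothetical trigger levels.
UTILISATION_TRIGGER = 0.80    # share of the limit in use
TOP_UP_BALANCE_RATIO = 0.50   # share of the original loan still outstanding


def review(account):
    """Return the list of strategy actions triggered for one account."""
    actions = []
    if account.product == "card" and account.limit > 0:
        utilisation = account.balance / account.limit
        if account.risk_band == "low" and utilisation >= UTILISATION_TRIGGER:
            actions.append("increase_limit")
    if account.product == "loan" and account.original_balance > 0:
        remaining = account.balance / account.original_balance
        if account.risk_band in ("low", "medium") and remaining < TOP_UP_BALANCE_RATIO:
            actions.append("offer_top_up")
    return actions


portfolio = [
    Account("low", "card", balance=850, limit=1_000),
    Account("medium", "loan", balance=400, original_balance=1_000),
    Account("high", "loan", balance=400, original_balance=1_000),
]
for account in portfolio:
    print(account.risk_band, account.product, review(account))
```

Running such a review across the whole portfolio every month costs almost nothing, which is the contrast with a manual review that the paragraph above draws.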

In so doing, a lender can optimise the distribution of their exposure, moving exposure from high risk segments to low risk segments or vice versa to achieve their business objectives. To ensure that this distribution remains optimised, the individual scores and strategies should be consistently tested using champion/challenger experiments. Champion/challenger is always a simple concept and can be applied to any strategy provided the systems exist to ensure that it is implemented randomly and that its results are measurable. The more sophisticated the strategies, the more sophisticated the champion/challenger experiments will look, but the underlying theory remains unchanged.
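One possible way to meet the "implemented randomly" and "measurable" requirements is to assign each account to a group by hashing its identifier, which gives a pseudo-random but repeatable split. The sketch below assumes a 10% challenger share and a made-up experiment name; both are illustrative choices.

```python
import hashlib

# Hypothetical share of accounts that receive the challenger strategy.
CHALLENGER_SHARE = 0.10


def assign_strategy(account_id, experiment="limit_increase_v1"):
    """Assign an account to 'champion' or 'challenger'.

    Hashing the experiment name together with the account id gives a
    pseudo-random split that is stable across runs, so an account stays
    in the same group for the life of the experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{account_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "challenger" if bucket < CHALLENGER_SHARE else "champion"


groups = [assign_strategy(f"ACC-{i}") for i in range(10_000)]
print(groups.count("challenger") / len(groups))  # close to CHALLENGER_SHARE
```

With the group stored against each account, results such as bad rates or revenue can later be compared between champion and challenger on a like-for-like basis.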

Elevating the Profile of Credit Risk

Once scorecards and risk segmented strategies have been implemented by the credit risk team, the team can focus on elevating their profile within the larger organisation. As credit risk strategies are first implemented they are unlikely to interest the senior managers of a lender who would likely have come through a different career path: perhaps they have more of a financial accounting view of risk or perhaps they have a background in something completely different like marketing. This may make it difficult for the credit risk team to garner enough support to fund key projects in the future and so may restrict their ability to improve.

To overcome this, the credit team needs to shift its focus from risk to profit. The best result a credit risk team can achieve is not to minimise losses but to maximise profits while keeping risk within an acceptable band. I have written several articles on profit models, but the basic principle is that once the credit risk department is comfortable with the way in which their models can predict risk, they need to understand how this risk contributes to the organisation’s overall profit.

This shift will typically happen in two ways: as a change in the messages the credit team communicates to the rest of the organisation and as a change in the underlying models themselves.

To change the messages being communicated by the credit team they may need to change their recruitment strategies and bring in managers who understand both the technical aspects of credit risk and the business imperatives of a lending organisation. More importantly though, they need to always seek to translate the benefit of their work from technical credit terms – PD, LGD, etc. – into terms that can be more widely understood and appreciated by senior management – return on investment, reduced write-offs, etc. A shift in message can happen before new models are developed but will almost always lead to the development of more business-focussed models going forward.
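As a small worked example of that translation, the sketch below uses the standard expected loss relationship (PD × LGD × exposure at default) and subtracts it, together with operating costs, from revenue. All of the segment figures are invented purely to illustrate the arithmetic.

```python
def expected_loss(pd, lgd, ead):
    """Expected loss = probability of default x loss given default x exposure at default."""
    return pd * lgd * ead


def expected_profit(revenue, pd, lgd, ead, operating_cost=0.0):
    """A deliberately simple per-account profit view: revenue less expected loss and costs."""
    return revenue - expected_loss(pd, lgd, ead) - operating_cost


# Hypothetical per-account figures for two segments (illustrative only).
segments = {
    "low risk": {"revenue": 400, "pd": 0.02, "lgd": 0.6, "ead": 5_000, "operating_cost": 50},
    "high risk": {"revenue": 750, "pd": 0.10, "lgd": 0.6, "ead": 5_000, "operating_cost": 50},
}

for name, figures in segments.items():
    loss = expected_loss(figures["pd"], figures["lgd"], figures["ead"])
    profit = expected_profit(**figures)
    print(f"{name}: expected loss {loss:.0f}, expected profit {profit:.0f}")
```

In this made-up case the higher risk segment carries five times the expected loss yet still earns more per account, which is the kind of message senior management is more likely to engage with than a PD figure on its own.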

So the final step, then, is to actually make changes to the models, and it is by the degree to which such specialised and profit-segmented models have been developed and deployed that a lender’s level of sophistication will be measured in more sophisticated markets.