When it comes to the application of statistical models in the lending environment, the majority of the effort is dedicated to calculating the risk of bad debt; relatively little effort is dedicated to calculating the risk of fraud.

There are several good reasons for this, the primary one being that credit losses have a much larger impact on a lender’s profit. Fraud losses tend to be confined to certain situations and certain products: application fraud can affect many unsecured lending products, but to a lesser degree than overall credit losses, while transactional fraud is typically restricted to card products.

I discuss application fraud in more detail in another article, so in this one I will focus on modeling transactional fraud and, in particular, on how the assumptions underpinning these models differ from those underpinning traditional behavioural scoring models.

Credit Models

The purpose of most credit models is to forecast future behaviour. Since the future of any particular account can’t be known, they do this by matching an account to a similar group of past accounts and assuming that this customer will behave in the same way as those customers did. In other words, they ask of each account: ‘how much does this look like all previous known-bad accounts?’

So if the only thing we know about a customer is that they are 25 years old and married, a credit model will typically look at the behaviour of all previous 25-year-old married customers and assume that this customer will behave in the same way going forward.
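The segment lookup described above can be sketched in a few lines of Python. This is a minimal illustration only, assuming a hypothetical list of historical accounts recorded as (age, marital status, went bad) tuples; real scorecards combine many more characteristics and usually smooth the estimates for thinly populated segments.

```python
from collections import defaultdict


def segment_bad_rates(past_accounts):
    """Bad rate per (age, marital status) segment.

    past_accounts: iterable of hypothetical (age, marital_status, went_bad) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # segment -> [bad accounts, all accounts]
    for age, status, went_bad in past_accounts:
        tally = counts[(age, status)]
        tally[0] += int(went_bad)
        tally[1] += 1
    return {segment: bads / total for segment, (bads, total) in counts.items()}


history = [
    (25, "married", True),
    (25, "married", False),
    (25, "married", False),
    (25, "married", False),
    (40, "single", False),
]
rates = segment_bad_rates(history)
# A new 25-year-old married customer is assumed to behave like the past ones.
print(rates[(25, "married")])  # 0.25
```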

The more sophisticated the model, the more accurate the matching; and the more accurately current customers are matched to past ones, the more valid it is to assume that the current customers will go on to behave as the past ones did.

Imagine the example below, where numerical characteristics have been replaced with illustrative ones. Here there are three customer groups: high risk, medium risk and low risk. A typical low risk customer is blue with stars, a high risk customer is red with circles and a medium risk customer is green with diamonds.

A basic model would look at any new customer (in this case green with stars), assign them to the group they most closely match (medium risk) and assume the associated outcome (a 3% bad rate). A more sophisticated model would calculate the relative importance of colour versus shape in predicting risk and would forecast an outcome somewhere between the medium and low risk outcomes.
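The difference between the two models can be sketched in Python. This is a toy illustration, not a real scoring method: only the 3% medium-risk bad rate comes from the text, while the low- and high-risk bad rates and the colour/shape weights are hypothetical values chosen so that colour matters slightly more than shape. The basic model makes a hard assignment to one group; the more sophisticated one blends the bad rates tied to each characteristic.

```python
# Hypothetical figures: only the 3% medium-risk bad rate comes from the text.
GROUPS = {
    "low": {"colour": "blue", "shape": "stars", "bad_rate": 0.01},
    "medium": {"colour": "green", "shape": "diamonds", "bad_rate": 0.03},
    "high": {"colour": "red", "shape": "circles", "bad_rate": 0.08},
}
# Assumed relative importance of each characteristic in predicting risk.
WEIGHTS = {"colour": 0.6, "shape": 0.4}


def basic_model(customer: dict) -> tuple[str, float]:
    """Hard assignment: the group sharing the most (weighted) characteristics wins."""
    def match_score(name: str) -> float:
        group = GROUPS[name]
        return sum(w for feature, w in WEIGHTS.items() if group[feature] == customer[feature])
    best = max(GROUPS, key=match_score)
    return best, GROUPS[best]["bad_rate"]


def weighted_model(customer: dict) -> float:
    """Blend the bad rates associated with each characteristic by its importance."""
    estimate = 0.0
    for feature, weight in WEIGHTS.items():
        rates = [g["bad_rate"] for g in GROUPS.values() if g[feature] == customer[feature]]
        if not rates:
            raise ValueError(f"no past group has {feature}={customer[feature]!r}")
        estimate += weight * sum(rates) / len(rates)
    return estimate


new_customer = {"colour": "green", "shape": "stars"}
print(basic_model(new_customer))               # ('medium', 0.03)
print(round(weighted_model(new_customer), 4))  # 0.022
```

With these assumed weights the blended forecast of roughly 2.2% lands between the medium and low risk outcomes, as the paragraph above describes.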

This is an over-simplification, but the concept holds well enough for the purposes of this article.

The key difficulty a credit model has to overcome is that it needs to forecast an unknown future based on a limited amount of data. This forces the model to group similar accounts and to treat them as the same. To extend the metaphor from above, few low risk accounts would actually have been blue with stars; there would have been varying shades of blue and varying star-like shapes. Yet it is impossible to model each account separately, so they would have been grouped together using the best possible description of the group as a whole.

Transactional fraud models need not be so tightly bound by this requirement, though the extra flexibility this allows is often overlooked by analysts too set in traditional ways.

Transactional Fraud Models

Many transactional fraud models take the credit approach and ask ‘how much does this transaction look like a typical fraud transaction?’ In other words, they start by separating all transactions into ‘fraud’ and ‘non-fraud’ groups, identify a ‘typical’ fraud transaction and then compare each new transaction to that template.

However, rather than only asking ‘how much does this look like a typical fraud transaction?’, a fraud model can also ask ‘how much does this look like this cardholder’s typical transaction?’

A transactional fraud model does not need to group customers or transactions together to get a view of the future; it simply needs to identify a transaction that does not fit a specific customer’s established spend pattern. Assume a typical fraud case involves six transactions in a day, each with a value between €50 and €500 and the majority of them occurring in electronics stores. A credit-style model might create an alert whenever a card received its sixth transaction in a day totalling at least €300, or when it received its third transaction from an electronics store. However, if it were known that the cardholder in question had never previously used their card more than twice in a single day and had never bought goods at any of the stores visited, that same alert could have been triggered earlier and attached to a higher probability of fraud.
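The contrast between the two kinds of rule can be sketched in Python. This is a hedged illustration: the `Txn` record, the merchant names and the exact form of the cardholder rule (alert once today’s new-merchant transactions exceed the cardholder’s historical daily maximum) are assumptions made for the example; only the generic thresholds come from the text.

```python
from dataclasses import dataclass


@dataclass
class Txn:
    merchant: str
    category: str
    amount: float  # euros


def credit_style_alert(todays_txns: list[Txn]) -> bool:
    """Template rule: the same thresholds apply to every cardholder."""
    total = sum(t.amount for t in todays_txns)
    electronics = sum(1 for t in todays_txns if t.category == "electronics")
    return (len(todays_txns) >= 6 and total >= 300) or electronics >= 3


def customer_specific_alert(todays_txns: list[Txn], max_daily_count: int,
                            known_merchants: set[str]) -> bool:
    """Cardholder rule: more new-merchant transactions today than the
    cardholder has ever made in a single day."""
    new_merchant_count = sum(1 for t in todays_txns if t.merchant not in known_merchants)
    return new_merchant_count > max_daily_count


today = [
    Txn("gadget_hub", "electronics", 420.0),
    Txn("phone_world", "electronics", 380.0),
    Txn("style_store", "clothing", 150.0),
]
print(credit_style_alert(today))  # False: only 3 transactions, 2 in electronics
print(customer_specific_alert(today, max_daily_count=2, known_merchants={"corner_grocer"}))  # True
```

On the third transaction of the day the template rule is still silent, while the cardholder-specific rule has already fired.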

A large percentage of genuine spend on credit cards is recurring; that is to say, it happens at the same merchants month in and month out. In a project on this subject, I found that an average of 50% of genuine transactions occurred at merchants that the cardholder had visited at least once in the previous six months (that number doesn’t drop much when only the previous three months are used). Some merchant categories are more prone to repeat purchases than others, but this can be catered for during the modeling process. For example, you probably buy your groceries at one of three or four stores every week, but you might frequently try a new restaurant.
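A measurement like the one above could be reproduced with something along these lines. This is a minimal Python sketch, assuming each cardholder’s transactions are available as hypothetical (date, merchant id) pairs and approximating six months as 183 days; it is not the code used in the project.

```python
from datetime import date, timedelta


def repeat_merchant_share(txns: list[tuple[date, str]], lookback_days: int = 183) -> float:
    """Share of a cardholder's transactions made at a merchant they had already
    visited within the preceding lookback window (roughly six months by default)."""
    last_seen: dict[str, date] = {}
    repeats = 0
    for txn_date, merchant in sorted(txns):
        previous = last_seen.get(merchant)
        if previous is not None and txn_date - previous <= timedelta(days=lookback_days):
            repeats += 1
        last_seen[merchant] = txn_date
    return repeats / len(txns) if txns else 0.0


card_history = [
    (date(2024, 1, 5), "corner_grocer"),
    (date(2024, 2, 5), "corner_grocer"),
    (date(2024, 3, 5), "corner_grocer"),
    (date(2024, 3, 9), "new_bistro"),
]
print(repeat_merchant_share(card_history))  # 0.5
```

Tracking only the most recent visit per merchant is enough, because a merchant was visited within the window if and only if its latest earlier visit falls inside it. The earliest transactions have no history to match against, so in practice one would only measure transactions after a warm-up period; passing `lookback_days=92` gives the three-month variant.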

The majority of high-value fraud is removed from the customer in both time and geography. A card might be ‘skimmed’ at a restaurant in London, but that data might then be emailed to America or Asia where, a month later, it is converted into a new card to be used by a fraudster. This means that fraudsters seldom know the genuine customer’s spend history, so matching their fraudulent spend to established patterns is nearly impossible. In the same project, over 95% of fraud occurred at merchants that the genuine cardholder had not visited in the previous six months. Simply applying a binary cut-off based on whether the merchant in question was a regular merchant would nearly double the hit rates of the existing rule set.
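The ‘near doubling’ follows from the two percentages above. As a rough check, assume (an assumption, not a result reported from the project) that the transactions hit by the existing rules share these proportions: about 95% of fraudulent hits fall at non-regular merchants, against about 50% of genuine hits. Writing $F$ and $G$ for the number of fraudulent and genuine hits, the hit rate $h$ before, and $h'$ after, adding the regular-merchant cut-off is:

$$
h = \frac{F}{F + G}, \qquad
h' = \frac{0.95\,F}{0.95\,F + 0.50\,G} \approx \frac{0.95}{0.50}\cdot\frac{F}{G} \approx 1.9\,h \quad \text{when } F \ll G.
$$

The approximation relies on fraud being a small share of rule hits; the larger that share, the smaller the uplift.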

Maintaining Customer Histories

The standard approach to implementing a customer-specific history would be as illustrated above. In the live environment, new transactions are compared to the historical record and are flagged if the merchant is new or, in more sophisticated cases, if the transaction value exceeds merchant-level cut-offs. The fact that a transaction falls outside the customer’s history is then used alongside other fraud rules to prioritise alerts. Later, in a batch run, the history is updated with data relating to new merchants and changes to merchant-level patterns. If only a fixed period’s worth of data is stored, older data is dropped at this stage. Limiting the history like this is common because it improves response times, and three to six months’ worth of data is usually enough.
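The live-check and batch-update cycle described above can be sketched as a small Python class. The class name, the 1.5× leeway on the largest amount previously seen at a merchant (used here as the merchant-level cut-off) and the 180-day retention window are all hypothetical choices for illustration; a production system would sit on a database rather than in-memory dictionaries.

```python
from datetime import date, timedelta


class MerchantHistory:
    """Hypothetical per-cardholder history of merchants and merchant-level spend."""

    def __init__(self, retention_days: int = 180) -> None:
        self.retention = timedelta(days=retention_days)
        # card_id -> merchant_id -> (last visit date, largest amount seen at that merchant)
        self.history: dict[str, dict[str, tuple[date, float]]] = {}
        # Transactions authorised since the last batch run, not yet in the history.
        self.pending: list[tuple[str, str, date, float]] = []

    def check(self, card_id: str, merchant_id: str, amount: float,
              leeway: float = 1.5) -> list[str]:
        """Live step: read-only comparison against the stored history."""
        merchants = self.history.get(card_id, {})
        if merchant_id not in merchants:
            return ["new_merchant"]
        if amount > merchants[merchant_id][1] * leeway:
            return ["above_merchant_cutoff"]
        return []

    def record(self, card_id: str, merchant_id: str, txn_date: date, amount: float) -> None:
        """Queue the transaction for the next batch run rather than updating live."""
        self.pending.append((card_id, merchant_id, txn_date, amount))

    def batch_update(self, run_date: date) -> None:
        """Batch step: fold in queued transactions, then drop merchants
        not visited within the retention window."""
        for card_id, merchant_id, txn_date, amount in self.pending:
            merchants = self.history.setdefault(card_id, {})
            last_seen, max_amount = merchants.get(merchant_id, (txn_date, 0.0))
            merchants[merchant_id] = (max(last_seen, txn_date), max(max_amount, amount))
        self.pending.clear()
        cutoff = run_date - self.retention
        for card_id in list(self.history):
            kept = {m: v for m, v in self.history[card_id].items() if v[0] >= cutoff}
            if kept:
                self.history[card_id] = kept
            else:
                del self.history[card_id]


h = MerchantHistory(retention_days=180)
print(h.check("card_1", "corner_grocer", 40.0))   # ['new_merchant']
h.record("card_1", "corner_grocer", date(2024, 1, 10), 40.0)
h.batch_update(run_date=date(2024, 1, 11))
print(h.check("card_1", "corner_grocer", 45.0))   # []
print(h.check("card_1", "corner_grocer", 100.0))  # ['above_merchant_cutoff'] (cut-off is 60.0)
h.batch_update(run_date=date(2024, 9, 1))         # January visit is now outside the window
print(h.check("card_1", "corner_grocer", 45.0))   # ['new_merchant']
```

Keeping the live `check` read-only and deferring all writes to `batch_update` mirrors the split described above: the authorisation path only reads, and the heavier maintenance work happens offline.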

Customer-specific patterns like this are not enough on their own to indicate fraud but, when used in conjunction with an existing rule set in this way, they can add significant value.

There are, of course, some downsides to this approach, primarily the amount of data that needs to be stored and accessed. This is particularly true if your fraud rules are triggered during the authorisations process. In these cases it may be necessary to accept more fraud risk in exchange for performance by using only basic rules in the authorisations system, followed by the full rule set in the reactive fraud detection system. Most card issuers follow this sort of approach, since the key goal of authorisations is good customer service through fast turn-around times rather than fraud prevention.

The amount of data stored and accessed should be matched to each issuer’s data processing capabilities. As mentioned earlier, simply accessing a rolling list of previously visited merchants can nearly double the hit rate of existing rules and is not a data-intensive process. Including average values, GIS or other value-added data should improve rule hit rates even further, but at an added processing cost.

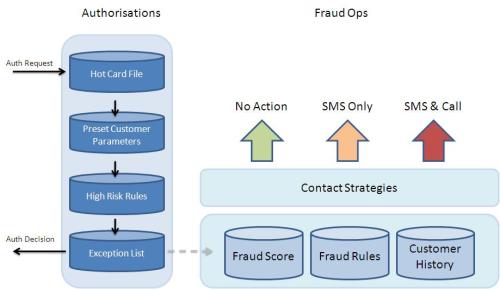

The typical implementation would look like the diagram below:

In this set-up, customer history is not queried live but is instead used to update a series of specific fields, such as customer parameters and an exception file. The customer parameters relate to the value of spend typical of each customer and could be updated daily or weekly; even monthly updates can work if sufficient leeway is included when they are calculated. An exception file lists specific customers to whom the high risk fraud rules should not apply. This is usually done to allow frequent high risk spenders or frequent users of high risk merchant types (often casinos) to spend without continuously hitting fraud rules.
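A minimal Python sketch of this set-up follows, under loudly stated assumptions: the customer parameter is taken to be the largest recent transaction multiplied by a leeway factor, `DEFAULT_LIMIT` and `HIGH_RISK_CATEGORIES` are hypothetical values, and the function names are invented for illustration. The point it shows is that the live check reads only precomputed fields and never queries the history itself.

```python
DEFAULT_LIMIT = 250.0  # hypothetical limit for cards with no history yet
HIGH_RISK_CATEGORIES = {"casino"}  # hypothetical high-risk merchant types


def customer_spend_limit(recent_amounts: list[float], leeway: float = 2.0) -> float:
    """Customer parameter: largest recent transaction widened by a leeway factor,
    so the value stays usable between daily, weekly or monthly refreshes."""
    return max(recent_amounts) * leeway if recent_amounts else DEFAULT_LIMIT


def authorisation_flags(card_id: str, amount: float, category: str,
                        params: dict[str, float], exceptions: set[str]) -> list[str]:
    """Live check using only precomputed fields; no history is queried here."""
    flags = []
    if amount > params.get(card_id, DEFAULT_LIMIT):
        flags.append("above_customer_limit")
    if category in HIGH_RISK_CATEGORIES and card_id not in exceptions:
        flags.append("high_risk_merchant")
    return flags


# Batch step (e.g. nightly): refresh the parameters and the exception file.
params = {"card_1": customer_spend_limit([20.0, 55.0, 80.0])}  # 160.0
exceptions = {"card_1"}  # a known, frequent casino customer

print(authorisation_flags("card_1", 120.0, "casino", params, exceptions))  # []
print(authorisation_flags("card_1", 200.0, "casino", params, set()))
# ['above_customer_limit', 'high_risk_merchant']
```

A larger leeway makes the parameter tolerate longer gaps between refreshes at the cost of catching fewer unusual transactions.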

Once an authorisation decision has been made, the transaction data is passed into the offline environment, where it is run through a series of fraud rules and sometimes a fraud score. It is in this environment that the most value can be gained from adding a customer-specific history. Because this is an offline environment, there is more time to query larger data sets and to use that information to prioritise contact strategies, which should always include the use of SMS alerts as described here.

Here, the fact that a transaction has fallen outside the historical norm is used as an input into other rules. For example, if there have been more than three transactions on an account in a day and at least two of those were at new merchants, a phone call is queued.
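The example rule above could be expressed roughly as follows. This is a hedged Python sketch: the `ProcessedTxn` record and the assumption that each transaction arrives already carrying a `new_merchant` flag from the history comparison are illustrative, not a description of any particular fraud system.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class ProcessedTxn:
    card_id: str
    day: date
    merchant_id: str
    new_merchant: bool  # outside-history flag produced by the history comparison


def cards_to_call(txns: list[ProcessedTxn], count_threshold: int = 3,
                  min_new: int = 2) -> list[str]:
    """Queue a call when a card has more than `count_threshold` transactions
    in a day and at least `min_new` of them are at new merchants."""
    per_day: dict[tuple[str, date], list[ProcessedTxn]] = defaultdict(list)
    for t in txns:
        per_day[(t.card_id, t.day)].append(t)
    queue = set()
    for (card_id, _), day_txns in per_day.items():
        new_count = sum(t.new_merchant for t in day_txns)
        if len(day_txns) > count_threshold and new_count >= min_new:
            queue.add(card_id)
    return sorted(queue)


d = date(2024, 5, 1)
txns = (
    [ProcessedTxn("A", d, f"m{i}", i < 2) for i in range(4)]    # 4 txns, 2 new
    + [ProcessedTxn("B", d, f"m{i}", i < 1) for i in range(4)]  # 4 txns, 1 new
    + [ProcessedTxn("C", d, f"m{i}", True) for i in range(3)]   # 3 txns, all new
)
print(cards_to_call(txns))  # ['A']
```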